Creating a Data Quality Rules Management Repository

In this guide you will learn the importance of creating a data quality rules process in your data migration project. Plus, you’ll learn how to create an app for storing, reporting and coordinating your rules.

If your project does not have a structured approach to discovering, measuring and resolving data quality rules then the likelihood is your project will increase its likelihood of a significant delay or outright failure

Note: Want more Data Quality Tutorials? Check out Data Quality Pro, our sister site with the largest collection of hands-on tutorials, data quality guides and support for Data Quality on the internet.

Part 1: What are data quality rules?

Every system needs checks and balances to run smoothly.

Information systems are no different. There are literally thousands of data quality rules in existence throughout even the most modest of businesses. These rules dictate how the information should be stored and handled in order to maintain the current business operations.

Sadly, these rules are rarely recorded and documented. If they are, they’re often out-of-sync with reality.

If you walked into any organisation and asked for a definitive set of rules that are enforced on their data you would mostly find some scant design documentation and if you're lucky one or two domain experts who can still decipher the complex application rules that govern the data.

Data quality rules provide two main components that help us ensure our legacy data is fit enough to survive the arduous journey ahead.

Data Quality Measurement

Prioritised Improvement Activities

Data Quality Measurement

A data quality measure simply gives us a metric for gauging the health of our data.

For example, if we were migrating hospital records we could create a data quality rule that states “there should be no duplicate patient information”.

An example metric could be 127 patients were found to be duplicated out of a list of 10,000.

Data Quality Improvement Activities

The second part of our data quality rule is designed to help us manage any defects in the data.

In our previous example, the migration may well fail if we leave those customers in as duplicates instead of correctly merging together. So our improvement activities would define the steps needed to deduplicate and combine the data.

Tip: Check out Data Quality Rules Process for Data Migration, featuring John Morris

Part 2: Why are Data Quality Rules so Beneficial to Data Migration?

Like all great ideas, data quality rules are often very simple to explain.

Unfortunately, they are very rarely adopted in a structured manner and this causes one of the main causes of project failure in most migrations.

A lot of projects will perform data profiling or testing (an important element of data quality rules management) but not in a unified, coordinated way.

Projects are often reactive in nature, only when issues are found during the ETL process or in the final load testing for example will they document issues and attempt to resolve them.

When implemented correctly, the process of discovering and managing data quality rules should take centre stage on your migration. This pivotal role of data quality rules makes them so important and useful to the project. They become the "glue" that binds the business sponsors and stakeholders to the project and ensures they take ownership of the issues the data presents.

Data can rarely be "cleansed" or improved without significant business input so the business must take an active role in the data quality rules process.

Part 3: How do we Create Data Quality Rules?

The key to creating useful and accurate data quality rules is to get the right people into a workshop.

Taking a "techie" approach by hammering away with profiling tools without consulting the right business domain experts will just give you a whole lot of wonderful charts and statistics that are meaningless.

Together, data quality analysis and business experts make a wonderful partnership so plan your data quality rules workshops in advance, bring the right level of intelligence and analysis into the sessions and define the rules that are important to your project.

Start with Legacy Rules

There is a temptation to launch into defining the rules that will map our data to the target platform.

This is a mistake, we need to first define the rules that govern our legacy environment to ensure local consistency.

We examine the local quality of our data based on some basic conceptual and logical data models that define what data items we need for the migration.

For example, if we define a basic customer to service relationship rule that is in evidence in our legacy business, how many occurrences are there across our systems that breaks this rule?

Even though our data model may theoretically prevent this kind of defect from occurring, in reality our knowledge workers are adept at conjuring up weird and wonderful ways to massage the data into a defective rule.

Legacy system consolidation is a key challenge. If you think there are defective rules in a single system, just wait until you throw multiple databases into the mix!

Once again, define your logical model that has been agreed with the business as "the way we do business".

Create the necessary data quality rules that reflect that thinking and then examine the data across all the legacy systems to see where rules are broken.

A recent example I found was where a data quality rule stipulated that a company account should only have one promotional offer per year.

That rule was well and truly broken when we consolidated all of the different customer databases together and found that in one instance a single company had been duplicated 48 times, meaning they had received over 200 discounted orders for the year!

So, defining data quality rules are not only sound practice for data migration projects, they will save you money in your ongoing data quality activities too.

As we uncover our rules and discover their defects, we need to record the activities required to either mitigate or eliminate the problems.

Once again, the business has to make these decisions in unison with the project team, it is not our data, the data stakeholders and sponsors need to determine what level of resource and funding they can provide in order to bring the data up to a satisfactory level.

Wherever possible, aim to fix the problem at source as opposed to a "quick-fix" in the migration technology itself. This way you get benefits back into the business and simplify the migration logic.

Legacy to Target Rules

Okay, we've got our legacy environment in order with a healthy set of rules and well managed data, now we need to see how fit the data is to migrate and support our new business environment.

The key here is to look not just at the target schema but the target business model.

Will the new business services place increased pressure on our legacy data to perform new functions?

Your data could be fit for purpose in the old world but chronically unfit for driving automated processes in the new world.

So create rules that not only uncovers gaps between the legacy and target models but also the target business functions.

Going Agile

This is not a waterfall process, it requires an iterative approach, repeatedly learning and adapting as you uncover more rules and the target environment begins to take shape.

The key is not to stop and wait for things like the target schema, get started on day 1 of your project and do what you can.

Iterative "sprints" are useful in this respect. By creating time-boxed discovery activities you get the project used to delivering benefits early and often, don't wait until testing to uncover these issues.

Data Quality Rules Template

Guidelines for using the Data Quality Rules Template

There is a far more detailed explanation of Data Quality Rules in John Morris’ Practical Data Migration available from Amazon.

In addition, John has supplied us with several templates (see below) that you can use as part of your entire data quality rules process.

But before you go further, please read some of the advisory notes regarding how to create Data Quality Rules Templates:

All Data Issues will be recorded on a DQR form and given a DQR ID (DQR Example attached).

The DQR will be forwarded to the DQR administrator who will action urgent ones but will hold over the majority for consideration by the DQR board

The DQR Board will meet on a regular (weekly?) basis.

The DQR board will prioritise new DQR (including closing a DQR) and review outstanding DQR

Each DQR will have a Qualitative and a Quantitative section

The Qualitative section is the first extended statement of the issue and can be impressionistic.

The Quantitative section will contain a deliverable that can be measured so that we will know the extent of the problem

The Method statement describes how the issue will be resolved.

The Tasks section breaks down the Method statement, where necessary, into individual steps, each of which will have an owner, a clear deliverable and a date for delivery.

The Metrics statement shows how we will bring together the measured deliverables in the Tasks section into a single figure that we can track up and down the project.

What goes into a Data Quality Rule?

An example of a DQR document can be found below. It holds:

Name, Scope, Author, Date, ID, Priority and Status – These fields identify each occurrence of a DQR and allow consolidated reporting.

Qualitative Statement – the first impressionistic statement of the problem

Quantitative Statement – A measurement of the issue under consideration

Method Statement - An outline of what the project is going to do (even if that is “Nothing”) about the issue.

Tasks List – Where necessary break the Method Statement down into a series of steps with deliverables, persons responsible and completion dates

Metrics – what will be measured to ensure that this DQR has been successfully completed. All the metrics will be consolidated into composite figures for onward reporting.

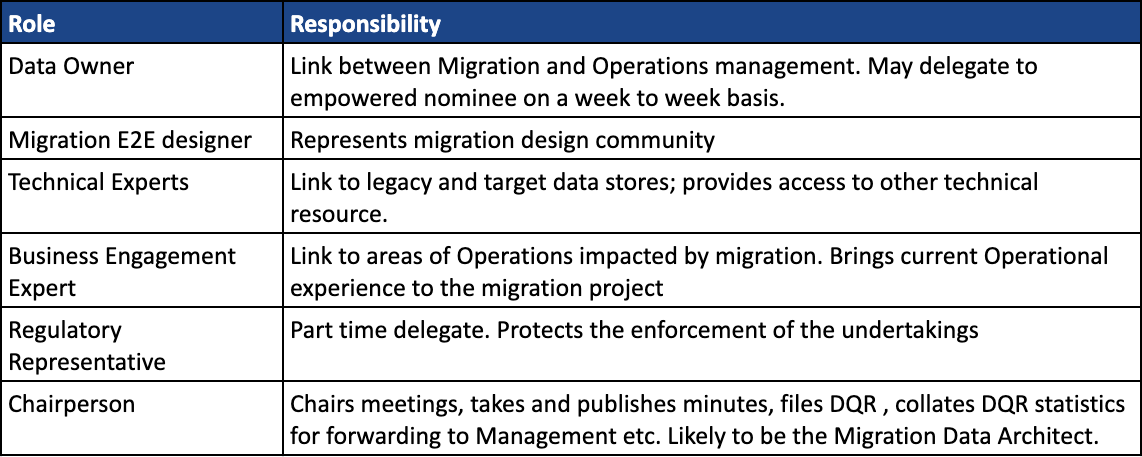

What are the Roles and Responsibilities?

About the Author

Dylan Jones

Co-Founder/Contributor - Data Migration Pro

Principal/Coach - myDataBrand

Dylan is the former editor and co-founder of Data Migration Pro. A former Data Migration Consultant, he has 20+ years experience of helping organisations deliver complex data migration, data quality and other data-driven initiatives.

He is now the founder and principal at myDataBrand, a specialist coaching, training and advisory firm that helps specialist data management consultancies and software vendors attract more clients.