Data Migration Testing Strategy:

A Complete Guide to Data Migration Testing Success

Data Migration Testing requires a comprehensive strategy in order to reduce risk and deliver a successful migration for the end users.

In this guide, David Katzoff, Managing Director of Valiance Partners, a specialist data migration technology and service provider, outlines a blueprint for designing an effective data migration testing strategy.

David rounds off the article with a useful checklist of recommendations that you can use to benchmark your own approach.

Part 1: How to Implement an Effective Data Migration Testing Strategy

Compliance and business risk plays a significant role in the implementation of corporate information systems.

The risks associated with these systems are, in general, well known.

However, as part of the implementation process, many of these information systems will be populated with legacy data, and the compliance and business risks associated with data and content migrations are not necessarily as well understood.

In this context, risks associated with data migration are a direct result of migration error. Further, industry testing strategies to mitigate such risk, or more specifically data migration error, lack consistency and are far from deterministic.

This article offers thoughts and recommendations on how to create a more robust and consistent data migration testing strategy.

Before diving into the detail, a bit of background: Valiance Partners has tested hundreds of data and content migrations, primarily in FDA-regulated industries (pharmaceuticals, medical devices, biotechnology and food products), manufacturing and automotive.

The information presented here includes some of the lessons learned from our clients’ quality control and the actual error history from testing the migrations of hundreds of thousands of fields and terabytes of content.

The recommended approach to designing a migration testing strategy is to document each risk and its likelihood of occurrence, and then define the means to mitigate that risk through testing where appropriate. Identifying risks is tricky, and much of the process will be specific to the system being migrated.

Let’s review two systems to illustrate this point:

In the first case, migrating financial data in retail banking typically involves high-volume migrations (tens or hundreds of millions of records) where source and destination records are quite similar, with minimal translation and little if any data enrichment.

For a second example, consider complaints management at a consumer products company. These systems are typically less mature: newer implementations, and their associated business processes, have to adapt to varying business and compliance requirements. By comparison, these systems have modest volumes (tens or hundreds of thousands of records), with complex translations and data enrichment needed to complete the newer records as they are migrated.

In both cases, getting the migrated data accurately represented in the destination system is critical. However, the process by which accuracy is defined will vary significantly between these two systems and their associated migrations.

In the first case, the financial services industry has evolved to the point where data interchange standards exist, which simplifies this process greatly.

In the case where complaint management data is migrated, significantly more upfront analysis will be required to “best fit” the legacy data in the new system.

This analysis will derive the data enrichment needed to fill in incomplete records, identify data cleansing requirements through pre-migration analysis, dry-run the migration process and verify the test results, all before the final data migration requirements can be understood.

System specific characteristics aside, there are several options for minimizing the occurrence of migration error through testing. The following discussion reviews these options and presents a set of recommendations for consideration.

Part 2: Data Migration Testing - What are the options?

The de facto approach to testing data and content migrations relies upon sampling, where some subset of random data or content is selected and inspected to ensure the migration was completed “as designed”.

Those who have tested migrations using this approach are familiar with the typical iterative test, debug and retest method, where subsequent executions of the testing process reveal different error conditions as new samples are reviewed.

Sampling works, but it relies on an acceptable level of error and an assumption of repeatability. An acceptable level of error implies that less than 100% of the data will be migrated without error, and the level of error that can go undetected is inversely proportional to the number of samples tested (refer to sampling standards such as ANSI/ASQ Z1.4).
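The link between error rate and sample size can be made concrete with a simple binomial model. The sketch below assumes, as sampling itself does, that errors occur independently at a fixed per-record rate, and computes how many randomly chosen records must be inspected for a given chance of seeing at least one error. It is a simplified illustration, not a substitute for the acceptance-sampling tables in ANSI/ASQ Z1.4; the function names are hypothetical.

```python
import math


def detection_probability(error_rate: float, sample_size: int) -> float:
    """Probability that a random sample contains at least one erroneous record,
    assuming errors occur independently at a fixed per-record rate."""
    return 1.0 - (1.0 - error_rate) ** sample_size


def sample_size_for_confidence(error_rate: float, confidence: float) -> int:
    """Smallest n such that detection_probability(error_rate, n) >= confidence."""
    if not (0.0 < error_rate < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("error_rate and confidence must both be in (0, 1)")
    # Solve 1 - (1 - p)^n >= C for n, i.e. n >= ln(1 - C) / ln(1 - p).
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - error_rate))


if __name__ == "__main__":
    for rate in (0.01, 0.001, 0.0001):
        n = sample_size_for_confidence(rate, 0.95)
        print(f"error rate {rate:.2%}: inspect {n} records for a 95% chance of seeing an error")
```

Under this model, a 1% error rate requires 299 sampled records for a 95% chance of catching at least one error, while a 0.01% rate requires close to 30,000. Rare errors are therefore the ones most likely to slip through a sample.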

As for the assumption of repeatability, the fact that many migrations require four, five or more iterations of testing with differing results implies that one of the key tenets of sampling is not upheld, namely that “non-conformities occur randomly and with statistical independence…”.

Even with these shortcomings, sampling has a role in a well-defined testing strategy, but what are the other testing options?

The following lists options for testing by the phase of the migration process:

Pre-Data Migration Testing

These tests occur early in the migration process, before any migration, even migration for testing purposes, is completed. The pre-migration testing options include:

Verify scope of source systems and data with user community and IT. Verification should include data to be included as well as excluded and, if applicable, tied to the specific queries being used for the migration.

Define the source to target high-level mappings for each category of data or content and verify that the desired type has been defined in the destination system.

Verify destination system data requirements such as the field names, field type, mandatory fields, valid value lists and other field-level validation checks.

Using the source-to-destination mappings, test the source data against the requirements of the destination system. For example, if a destination field is mandatory, ensure that the corresponding source field is not null; if a destination field has a list of valid values, ensure that the corresponding source field contains only those values.

Test the fields that uniquely link source and target records, and ensure that there is a definitive mapping between the record sets.

Test source and target system connections from the migration platform.

Test the tool configuration against the migration specification, which can often be done via black-box testing on a field-by-field basis. If designed well, this testing can also verify that the migration specification's mappings are complete and accurate.
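Several of the pre-migration checks above (mandatory fields, valid value lists and a unique linking key) can be automated once the source-to-destination mappings exist. The following minimal sketch assumes the source data has been extracted as a list of Python dictionaries; `FieldRule`, `check_source_records`, the field names and the sample records are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple


@dataclass
class FieldRule:
    """Destination requirement for one mapped field (hypothetical structure)."""
    source_field: str                          # source field mapped to the destination field
    mandatory: bool = False                    # destination field must not be empty
    valid_values: Optional[Set[str]] = None    # destination valid value list, if any


def check_source_records(records: List[dict], rules: List[FieldRule],
                         key_field: str) -> List[Tuple[object, str]]:
    """Return (record key, problem) pairs for source data that would fail
    destination validation, plus missing or duplicate linking keys."""
    problems: List[Tuple[object, str]] = []
    for rec in records:
        key = rec.get(key_field)
        for rule in rules:
            value = rec.get(rule.source_field)
            empty = value is None or value == ""
            if rule.mandatory and empty:
                problems.append((key, f"{rule.source_field} is empty but mandatory"))
            elif rule.valid_values is not None and not empty and value not in rule.valid_values:
                problems.append((key, f"{rule.source_field}={value!r} is not a valid value"))
    # Each linking key must identify exactly one source record.
    for key, n in Counter(rec.get(key_field) for rec in records).items():
        if key is None or key == "":
            problems.append((key, f"{n} record(s) have no {key_field}"))
        elif n > 1:
            problems.append((key, f"{key_field} occurs {n} times"))
    return problems


if __name__ == "__main__":
    rules = [FieldRule("severity", mandatory=True, valid_values={"Low", "Medium", "High"}),
             FieldRule("product_code", mandatory=True)]
    records = [{"complaint_id": "C1", "severity": "High", "product_code": "P9"},
               {"complaint_id": "C2", "severity": "Urgent", "product_code": ""},
               {"complaint_id": "C2", "severity": "Low", "product_code": "P1"}]
    for key, problem in check_source_records(records, rules, "complaint_id"):
        print(key, problem)
```

Running the checks against the source, rather than waiting for the destination system to reject records, lets cleansing or enrichment be planned before the first test migration.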

Formal Data Migration Design Review

Conduct a formal design review of the migration specification when pre-migration testing is nearly complete, or during the earliest stages of migration tool configuration.

The specification should include:

A definition of the source systems

The source system’s data sets and queries

The mappings between the source system fields and the destination system

Number of source records

Number of source system records created per unit time (used to define the migration timing and downtime)

Identification of supplementary sources

Data cleansing requirements

Performance requirements

Testing requirements
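Capturing the specification in a structured form makes it easier to keep it in sync with the migration tool configuration and to reuse its figures, such as the record creation rate, when planning downtime. The sketch below is hypothetical: `MigrationSpec` is illustrative, and the downtime model (every record present when the source is frozen is migrated at a measured throughput, then verified) is a simplifying assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class MigrationSpec:
    """Hypothetical structured form of the migration specification items listed above."""
    source_systems: List[str]
    source_queries: Dict[str, str]      # data set name -> extraction query
    field_mappings: Dict[str, str]      # source field -> destination field
    source_record_count: int
    records_created_per_hour: float     # growth of the source while it remains in use
    supplementary_sources: List[str] = field(default_factory=list)
    cleansing_requirements: List[str] = field(default_factory=list)
    performance_requirements: List[str] = field(default_factory=list)
    testing_requirements: List[str] = field(default_factory=list)

    def records_at_cutover(self, hours_until_cutover: float) -> int:
        """Projected source volume when the legacy system is frozen for the production run."""
        return self.source_record_count + round(self.records_created_per_hour * hours_until_cutover)

    def estimated_downtime_hours(self, hours_until_cutover: float,
                                 throughput_per_hour: float,
                                 verification_hours: float) -> float:
        """Time to migrate every record at the measured throughput, plus time to verify."""
        migrate_hours = self.records_at_cutover(hours_until_cutover) / throughput_per_hour
        return migrate_hours + verification_hours


if __name__ == "__main__":
    spec = MigrationSpec(
        source_systems=["legacy_complaints"],
        source_queries={"complaints": "SELECT * FROM complaints WHERE status <> 'DELETED'"},
        field_mappings={"sev": "SEVERITY", "product": "PRODUCT"},
        source_record_count=200_000,
        records_created_per_hour=50,
    )
    # 30 days until cutover, 40,000 records/hour measured in test runs, 4 hours to verify.
    print(spec.records_at_cutover(720))  # 236000
    print(spec.estimated_downtime_hours(720, 40_000, 4))
```

The throughput figure would come from the post-migration throughput tests, and the verification time should be included because the production run is not complete until it has been verified.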

The formal design review should include representatives from the appropriate user communities, IT and management.

The outcome of a formal design review should include a list of open issues, the means to close each issue and approve the migration specification, and a process to keep the specification in sync with the migration tool configuration (which tends to change continuously until the production migration).

Post-Data Migration Testing

Once a migration has been executed, additional end-to-end testing can be performed.

Expect a significant number of errors to be identified during the initial test runs, although this number will be smaller if pre-migration testing was sufficient and well executed. Post-migration testing is typically performed in a test environment and includes:

Test the throughput of the migration process (number of records per unit time). This testing will be used to verify that the planned downtime is sufficient. For planning purposes, include the time needed to verify that the migration process completed successfully.

Compare Migrated Records to Records Generated by the Destination System – Ensure that migrated records are complete and of the appropriate context.

Summary Verification – There are several techniques that provide summary information, including record counts and checksums. Here, the number of records migrated is compiled from the destination system and then compared to the number of records extracted from the source. This approach provides only summary information, and if an issue exists, it often provides little insight into the issue's root cause.

Compare Migrated Records to Sources – Tests should verify that field values are migrated as per the migration specification. In short, source values and the field-level mappings are used to calculate the expected results at the destination. This testing can be completed using sampling where appropriate; if the migration includes data that poses significant business or compliance risk, 100% of the migrated data can be verified using an automated testing tool.

The advantages of the automated approach include the ability to identify errors that are less likely to occur (the proverbial needles in a haystack). Additionally, as an automated testing tool can be configured in parallel with the configuration of the migration tool, the ability to test 100% of the migrated data is available immediately following the first test migration.

When compared to sampling approaches, it is easy to see that automated testing saves significant time and minimizes the typical iterative test, debug and retest found with sampling.
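The core of such an automated check is straightforward: compute each record's expected destination values from its source values and the field-level mappings, then compare them with what was actually migrated. The sketch below is a minimal illustration, not a description of any particular product. The mapping format, field names and transforms are hypothetical, and it assumes the legacy record key is carried into the destination so the two sides can be linked. Because it recomputes expected values itself instead of reusing the migration tool's logic, it stays independent of the migration tool.

```python
from typing import Any, Callable, Dict, List, Tuple

# Destination field -> (source field, transform producing the expected value).
FieldMapping = Dict[str, Tuple[str, Callable[[Any], Any]]]


def expected_record(source: dict, mapping: FieldMapping) -> dict:
    """Apply the field-level mappings to one source record."""
    return {dest: transform(source.get(src)) for dest, (src, transform) in mapping.items()}


def compare_all(sources: List[dict], destinations: List[dict], mapping: FieldMapping,
                source_key: str, dest_key: str) -> List[str]:
    """Check 100% of migrated records; returns discrepancies (empty list = none found)."""
    issues: List[str] = []
    dest_by_key = {d.get(dest_key): d for d in destinations}
    if len(dest_by_key) != len(destinations):
        issues.append("destination contains duplicate record keys")
    seen = set()
    for src in sources:
        key = src.get(source_key)
        seen.add(key)
        dst = dest_by_key.get(key)
        if dst is None:
            issues.append(f"{key}: missing from destination")
            continue
        for field, want in expected_record(src, mapping).items():
            got = dst.get(field)
            if got != want:
                issues.append(f"{key}: {field} expected {want!r}, found {got!r}")
    # Records that appear only in the destination are also errors.
    for key in sorted(dest_by_key.keys() - seen, key=str):
        issues.append(f"{key}: in destination but not in source")
    return issues


if __name__ == "__main__":
    severity = {"1": "High", "2": "Medium", "3": "Low"}  # hypothetical value translation
    mapping: FieldMapping = {
        "COMPLAINT_ID": ("id", str),
        "SEVERITY": ("sev", severity.get),
        "PRODUCT": ("product", lambda v: (v or "").strip().upper()),
    }
    sources = [{"id": "C1", "sev": "1", "product": " p9 "},
               {"id": "C2", "sev": "3", "product": "p1"}]
    destinations = [{"COMPLAINT_ID": "C1", "SEVERITY": "High", "PRODUCT": "P9"},
                    {"COMPLAINT_ID": "C2", "SEVERITY": "Medium", "PRODUCT": "P1"}]
    for issue in compare_all(sources, destinations, mapping, "id", "COMPLAINT_ID"):
        print(issue)
```

Every discrepancy is reported against a specific record and field, which is what makes this style of testing useful for root-cause analysis compared with summary verification.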

Migrated content has special considerations.

For those cases where content is being migrated without change, testing should verify the integrity of the content is maintained and the content is associated with the correct destination record. This can be completed using sampling or as already described, automated tools can be used to verify 100% of the result.
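For unchanged content, one common way to verify integrity at scale is to compare cryptographic hashes of each source file and its migrated copy, while also confirming that the document is attached to the same record. The sketch below assumes both systems can export documents to files and that a manifest mapping each document ID to its file path and owning record ID is available; the manifest format and function names are hypothetical.

```python
import hashlib
from pathlib import Path
from typing import Dict, List, Tuple

# Hypothetical manifest: document id -> (exported file path, id of the owning record).
Manifest = Dict[str, Tuple[Path, str]]


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks (1 MiB by default) so large documents are not loaded at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_content(source: Manifest, destination: Manifest) -> List[str]:
    """Check that every source document arrived unchanged and on the right record."""
    issues: List[str] = []
    for doc_id, (src_path, src_owner) in source.items():
        if doc_id not in destination:
            issues.append(f"{doc_id}: not found in destination")
            continue
        dst_path, dst_owner = destination[doc_id]
        if file_sha256(src_path) != file_sha256(dst_path):
            issues.append(f"{doc_id}: content differs from source")
        if dst_owner != src_owner:
            issues.append(f"{doc_id}: attached to {dst_owner}, expected {src_owner}")
    for doc_id in sorted(destination.keys() - source.keys()):
        issues.append(f"{doc_id}: in destination but not in source")
    return issues
```

Hashing lets the source and destination exports be processed separately (for example, on different machines) and compared afterwards, rather than requiring both copies of each file side by side.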

Data Migration User Acceptance Testing

Functional subtleties related to the co-mingling of migrated data and data created in the destination system may be difficult to identify early in the migration process.

User acceptance testing provides an opportunity for the user community to interact with legacy data in the destination system prior to production release, and most often, this is the first such opportunity for the users.

Attention should be given to reporting, downstream feeds, and other system processes that rely on migrated data.

Production Migration

All of the testing completed prior to the production migration does not guarantee that the production process will be completed without error.

Challenges seen at this point include procedural errors and, at times, production system configuration errors. If an automated testing tool has been used for post-migration testing of data and content, executing another testing run is straightforward and recommended.

If an automated approach was not used, some level of sampling or summary verification is still recommended.
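A summary verification can be as simple as computing a record count and an order-independent checksum on each side and comparing the two pairs. The sketch below is a minimal illustration, assuming both record sets are available as dictionaries and that the compared fields are migrated without transformation (transformed fields would need their expected values computed first). As noted under Summary Verification, a mismatch shows that something differs but not which record. The function names are hypothetical.

```python
import hashlib
from typing import Iterable, List, Tuple


def record_digest(record: dict, fields: List[str]) -> int:
    """Hash the selected fields of one record, in a fixed field order."""
    # None and "" are treated as equivalent here; adjust if the distinction matters.
    values = ["" if record.get(f) is None else str(record.get(f)) for f in fields]
    canonical = "\x1f".join(values)  # a separator avoids ambiguous concatenations
    return int.from_bytes(hashlib.sha256(canonical.encode("utf-8")).digest()[:8], "big")


def summarize(records: Iterable[dict], fields: List[str]) -> Tuple[int, int]:
    """Return (record count, checksum). Summing digests makes the checksum independent
    of record order, and unlike XOR a duplicated record does not cancel itself out."""
    count, checksum = 0, 0
    for record in records:
        count += 1
        checksum = (checksum + record_digest(record, fields)) % (1 << 64)
    return count, checksum


if __name__ == "__main__":
    source = [{"id": 1, "amount": "10.00"}, {"id": 2, "amount": "5.50"}]
    destination = [{"ID": 2, "AMOUNT": "5.50"}, {"ID": 1, "AMOUNT": "10.00"}]
    # Field lists are given in mapping order: id -> ID, amount -> AMOUNT.
    print(summarize(source, ["id", "amount"]) == summarize(destination, ["ID", "AMOUNT"]))  # True
```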

Part 3: Data Migration Test Strategy Design Recommendations

In the context of data and content migrations, business and compliance risks are a direct result of data migration error, but a thorough testing strategy minimizes the likelihood of such errors.

The list below provides a set of recommendations to define such a testing strategy for a specific system:

Establish a comprehensive migration team, including representatives from the user community, IT and management. Verify the appropriate level of experience for each team member and train as required on data migration principles, the source and the destination system.

Analyze business and compliance risks with the specific systems being migrated. These risks should become the basis for the data migration testing strategy.

Create, formally review and manage a complete migration specification – While it’s easy to state, very few migrations take this step.

Verify the scope of the migration with the user community and IT. Understand that the scope of the migration may be refined over time as pre- and post-migration testing may reveal shortcomings of this initial scope.

Identify (or predict) likely sources of migration error and define specific testing strategies to identify and remediate these errors. This gets easier with experience and the error categories and conditions listed here provide a good starting point.

Use the field-level source-to-destination mappings to establish data requirements for the source system. Use these data requirements to complete pre-migration testing and, if necessary, cleanse or supplement the source data.

Complete an appropriate level of post-migration testing. For migrations where errors need to be minimized, 100% verification using an automated tool is recommended. Ensure that this automated testing tool is independent of the migration tool.

Look closely at the ROI of automated testing if there is concern about the cost, time commitment or iterative nature of migration verification via sampling.

Complete User Acceptance Testing with migrated data. This approach tends to identify application errors with data that has been migrated as designed.

Test the production run. If an automated testing tool was chosen, it is likely that 100% of the migrated data can be tested here with minimal incremental cost or downtime. If a manual testing approach is being used, complete a summary verification.
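The recommendations to analyze business and compliance risks and to define specific tests for likely sources of error can be tracked with a small risk register that pairs each risk with its likelihood and the test meant to mitigate it. The sketch below is illustrative only: the 1 to 5 likelihood and impact scales, the likelihood-times-impact score and the `MigrationRisk` class are assumptions, not part of the approach described in this article.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MigrationRisk:
    """One entry in a hypothetical migration risk register."""
    description: str
    likelihood: int                         # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int                             # assumed scale: 1 (minor) to 5 (severe business/compliance impact)
    mitigating_test: Optional[str] = None   # None means no test has been defined yet

    @property
    def score(self) -> int:
        # Simple likelihood x impact ranking; other scoring schemes are equally valid.
        return self.likelihood * self.impact


def prioritize(risks: List[MigrationRisk]) -> List[MigrationRisk]:
    """Return the risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


def untested_high_risks(risks: List[MigrationRisk], threshold: int) -> List[MigrationRisk]:
    """Risks at or above the threshold that have no mitigating test yet."""
    return [r for r in prioritize(risks) if r.score >= threshold and r.mitigating_test is None]


if __name__ == "__main__":
    register = [
        MigrationRisk("Complaint severity codes mapped to wrong values", 4, 5),
        MigrationRisk("Attachments linked to the wrong record", 2, 5, "100% content and owner check"),
        MigrationRisk("Free-text fields truncated", 3, 2),
    ]
    for risk in untested_high_risks(register, threshold=10):
        print(risk.score, risk.description)
```

Reviewing the untested high-scoring risks before each test cycle is one way to keep the testing strategy anchored to the risks identified for the specific system.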

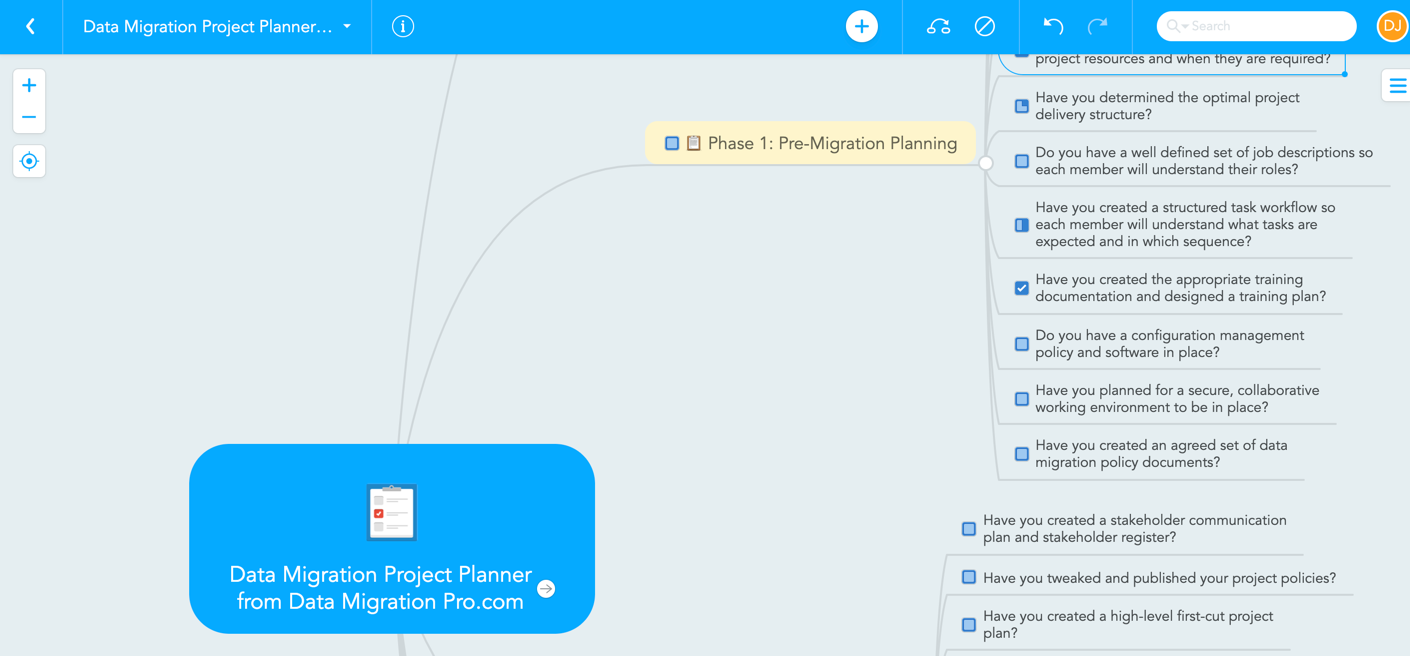

Want to get a data migration checklist, planning spreadsheet and MindMap, to help you plan your next data migration?

About the Author

David Katzoff

Managing Director - Valiance Partners, Inc.

David Katzoff brings more than 25 years of software product development, applications engineering, systems integration, project management and compliant business solutions design experience to his role. Much of that experience is concentrated in enterprise-class research, manufacturing and business and marketing systems for premier clients in the life sciences, financial services and manufacturing sectors.

As a co-founder of Valiance, David led the initial development of Valiance’s core software products TRUcompare and TRUmigrate.

His thorough understanding of the supported platforms, user interface design and novel testing techniques directly shaped Valiance’s unique product direction.

Visit the Valiance Partners website for more details: https://www.valiancepartners.com/
