Extreme data Pruning

How to Cut Effort, Complexity and Cost in your next Data Migration

In this best-practices guide we explore the need for data pruning in order to keep costs and delivery timelines to a minimum on our data migration projects.

With some simple tools and techniques you may just find that your projects are suffering from too much dead wood in the legacy environment.It’s time to cut back those data items you don’t need and create a far fitter and leaner data migration strategy.

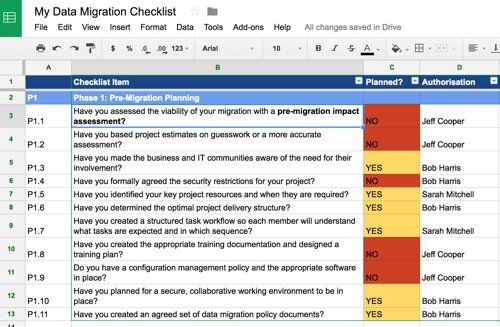

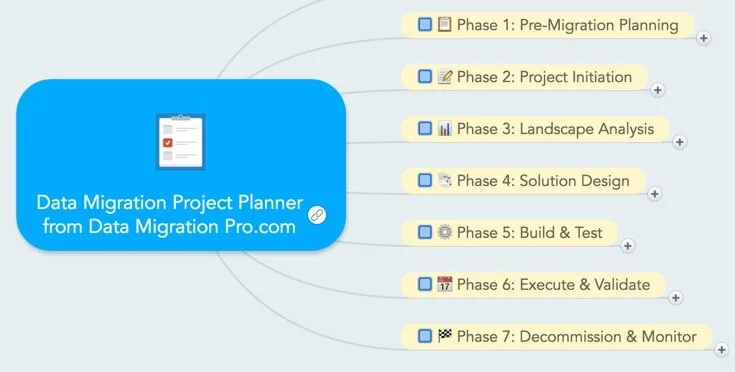

Get a free checklist toolkit: Project Planner Spreadsheet + MindMap

Serious about delivering a successful data migration?

Download the exact data migration checklist toolkit I use on client engagements and learn advanced tactics for data migration planning.

Toolkit includes:

✅ Project Planning Spreadsheet (for Excel/Google Sheets)

✅ Interactive Online MindMap (great for navigation)

✅ Pre-populated example templates (help you get started quickly)

Why do you need to prune your data during data migration?

Within the Data Migration Pro community we are currently exploring the issue of data migration scoping and it’s importance on the overall project.

One useful approach is to have an early review of all legacy data to assess what is valid or viable for migration.

The purpose of the gate phase is to reject all data from scope, unless a valid business or technical reason can be provided for including it.

As a gardener trims away the “dead wood” so must the data migration analyst look for opportunities to trim away data that offers little value to the business.

Many organisations assume that vast amounts of legacy data need to be in scope and proceed with the task of mapping the old and new worlds together.

Each data item that plays even a minor role in your migration can add significant time and cost to a project regardless of technology sophistication.

If you are following best-practice you will need to understand, profile and document attribute structures, content and relationships to gauge their fitness for migration for example and that’s before you have even coded any transformations or validated the migrated data.

Issues with the data invariably require pre-migration cleanse, pre-migration monitoring and even manual activities to get that seemingly innocuous attribute or record of information fit for migration.

But the problem is worse than that for some organisations.

Their project is slipping, time is running out so they take a chance hoping the data will get through. If it’s a bit “dirty”, “we’ll fix it in the target” won’t we?

No, you won’t.

Just because data is fit enough to support the old world does not mean it will support the new world and its new business services. One-touch automation, SOA, federated architectures, real-time warehousing – the list goes on.

And so will your migration if you do not prune your data effectively.

The new breed of system applications are less forgiving than those old legacy systems that allowed field overloading and free-form text. So now you’re even further behind in the project and your daily migration fallout rate is rising steadily and you can’t cope with the defect backlog.

So what’s the lesson?

Introducing The “Data Gate” Review

Create a data gate review on day 1.

This assumes that no data, nothing, absolutely zero, is valid for migration.

Start with a blank sheet of paper.

Start to identify some the key business objects from a conceptual view before you even touch a database. Create a model of key business data areas such as “customer”, “equipment”, “service”, “product” and model their relationships.

Drill deeper into this model and create a logical model for both legacy and target worlds. This will help analyse the gaps between legacy and target models, all this before you have even touched a database.

It is also time to explore the business processes to understand exactly what will be required of the new system.

Will there be greater pressure on the legacy data to have greater accuracy and integrity than previously? What wondrous new functions and processes are required in the new legacy app? These need to be validated against what the legacy data can reasonably support. (Remember those free-form text fields!)

Many companies have fallen foul of omitting this stage but it is a crucial one.

The old data may be accurate and correct, pass all profiling tests yet still fail to be fit for purpose if that new supply chain system requires the legacy objects to be bound by relationships or structures that are simply not achievable.

Simulate your data migration (look before you leap)

Now it’s time to roll out your data profiling, data quality improvement, model re-engineering and business intelligence tools.

Many people misunderstand the role of tools. They are not just required to audit legacy data and find metadata but to simulate the data migration.

They will ensure, without you having to build the migration platform, whether your data is capable of migration. Never assume that your data can actually be migrated and never wait until you move into migration coding to find out.

Profile your datastores and find the physical entities and relationships that have some relation to the higher-level business objects and business data areas that you have previously modeled.

Remember Data pruning does not mean you have to ignore important datasets.

For example, do not be afraid to provide an amnesty for all those hidden spreadsheets that the engineering team still use because you know deep down they always preferred Excel to the old inventory system!

Spot the obvious entities that should be in scope and then profile in greater detail to find what object attributes and records really are critical to your migration. Focus on the natural, primary and foreign keys first – the building blocks of data migration.

Now re-engineer the physical schemas and derive logical models so you can validate against your earlier logical models as a reality check.

At this phase you will almost certainly require a data quality tool that can perform matching, deduplication, standardisation, auditing and monitoring.

Relying solely on profiling is only part of the process. How can you assess the relationship between engineering codes between two platforms without cleaning and standardising prior to the matching assessment? Invest in the next generation of data quality tools that combine the full array of data quality functions, they are essential for modest to large scale data migrations and will repay their investment many times over.

Another valuable tool is a business intelligence or data visualisation tool.

Do not underestimate the benefit of these for deriving knowledge from your data and engaging with the business. They help you link your data quality audits against business data so you can make a judgement in business terms. They are also vital in helping you prioritise cleansing effort and even identifying the priority of migrating datasets closer to runtime.

Always remember that tools will only get you so far, you need a subject matter expert to really interpret what the technology is telling you.

Attributes x Records = Effort

One tip is to think of an in-scope set of data as a 3 dimensional box.

The X dimension are for attributes and the Y dimension are for records.

The 3rd or Z dimension is effort due to data improvement, analysis, project management, coding, mapping – the list goes on.

Now, try and reduce the size of this box to the smallest possible size that will support the intended business function. By doing this you are instantly reducing one of the most critical dimensions – effort.

For example, do you really need to store all customer transaction history?

One customer was planning to migrate 5 years of customer history for accounts that were already closed. The reason provided was one of the most common – regulatory. After failing to provide a valid justification they simply archived all stale customer data in a permanent store saving months of effort and cost.

Some CRM systems log every single touch point with a customer, most of which are of no business value – get those records out of scope.

Some attributes are just historic relics and are no longer used – drop them from your scope immediately. If someone wants them in they must justify why.

Use data discovery tools to analyse system logs and observe which tables are witnessing high activity and which ones never get touched. This will tell you what the business is really using. If your system doesn’t provide this kind of detail get a DBA to monitor how many records are inserted or updated in your legacy tables as this can also provide vital information for determining what information is critical to business.

Show me the money - what’s the cost of not pruning your scope?

We can put a simple cost on the project cost and effort associated with data attributes to help explain the importance of rigorous pruning and scoping.

For example, it’s fair to assume that to migrate a single attribute of data will require at least 2 hours effort in discovery, defect diagnosis, improvement, mapping, transformation design, programming and final validation. Not including any remedial work if it fails at runtime (which if you have followed best-practice should never happen!)

Factor that against the number of records to be migrated for that attribute and you’ve got an additional cost rating as 100,000,000 records will take a lot longer to analyse, test and migrate than 1000 as the effort is non-linear.

Different attributes are more critical than others so create a factoring scale based on whether the attribute is part of a key for example.

You can now create a simple monetary value on every attribute, its importance and its volume. This is crude but it still provides a benchmark and in reality 2 hours effort per attribute is far less than what projects would typically spend.

(Note: The role of the data migration project manager is to create these type of forecasting calculations based on the organisations own performance at typical data migration tasks).

Using this discover-justify-accept process at least 50% of a legacy dataset “volume” can often be placed out of scope with some efficient pruning.

Let’s put those figures into some kind of context.

A legacy dataset consists of 100 physical entities, 15 attributes on average in each and let’s ignore record count and complexity factors for simplicity.

That equates roughly to 3,000 man hours.

Factor in an average of 6 man hours productivity per day and you’re looking at 500 working days to complete the migration.

Based on UK day rates that equates to £200K labour charges.

You have 3 team members to share the load so that gives you roughly 166 elapsed days before completion.

Take away 50% of attributes and we save ourselves £100K in labour costs.

But its actually better than this because complexity is non-linear.

Research has shown that when projects operate at full capacity (typical of most migration projects) then variations in the process can wreak havoc as vital resource is diverted from the delivering the migration to resolving attribute-related defects.

Research clearly shows that as variation and complexity rise, productivity drops sharply so you can expect major increases in project output for every unit of data you eliminate from scope.

Major data migration often witness severe delays as the sheer volume of data issues starts to overwhelm the core team if you do not have the correct resources and planning in place to cope with data volumes and complexity.

In Summary

Here are some take-home lessons:

All data should be out of scope, unless you can justify a valid business or technical reason for including it in the migration

Profiling and scoping should be done as part of the business case but if not it should start on day 1 of the application implementation, do not wait for the data migration project to formally start

Only use advanced data quality and scoping tools combined with subject matter expertise to make judgements on whether data is acceptable for migration. Never rely on simple SQL analysis or anecdotal evidence as they will not be able to perform effective “data migration simulation”

Use data quality tools that do more than data profiling. Matching, standardisation and cleanse for example are all invaluable features so don’t just rely on outdated column profiling tools

Use business intelligence, data visualisation, business rule discovery and model re-engineering tools whenever budgets permit as they will provide considerable value in gathering intelligence and performing simulations

Assign a value to datasets and explain in clear terms to the business why data equals increased costs, longer delays and reduced quality

Data vs effort is non-linear, the more data you take out the faster and cheaper your project will become so prune hard to reduce delays

Gather statistics and measures on how long it takes your team to deliver every aspect of the migration so you can learn and re-use this critical information in future migration scoping and planning activities