Data Migration Go-Live Strategy:

What is it and why does it matter?

In this guide, we look at the different combinations of data migration go-live strategies and what they mean from both a technical and a business perspective.

The landscape of data migration implementation options has changed in recent years, so we thought a brief introduction to the different approaches would benefit our readers, particularly those with a business or non-technical background.

Data Migration Go-Live Strategy: Options

There are essentially three main problem areas to focus on when creating your go-live strategy:

Business Cutover Strategy: Explains how the business will be ‘spun up’ into a go-live state.

Transitional Storage Strategy: Explains how the data will be ‘housed’ while it is in flight between the old and new systems.

Synchronisation Strategy: Explains how the source and target systems will be synchronised post-migration.

1: Business Cutover Strategy

Seamlessly switching your business functions from the old system to the new system is clearly important to the success of your migration.

You need the confidence to know that the new target environment is fully tested and can deliver the services your business demands before you make the ‘big leap’.

There can be little room for error in modern business. If the new system comes online and service is impacted, the bad news can spread rapidly, severely damaging customer confidence (and potentially your career).

When switching business users and customers from the old world to the new world, there are three options for the cutover strategy: big bang, incremental or parallel.

We discuss each of these cutover approaches below.

Approach #1: The ‘Big-Bang Cutover’ Data Migration Strategy

I remember my first big bang project not with fondness but with abject fear…

We had a 48-hour window to migrate a global customer database to a new platform. Like generals, we had meticulously planned each phase and were confident the battle could be waged and won before the first users came online at 5am on Monday morning.

So, we camped out, ordered pizzas, brewed the coffee and got the ball rolling. Things went well until we hit a bug in the migration logic at about 2am on Monday morning. It was one of those occasional gremlins that no amount of testing could have prevented.

Frantic hacks and tweaks from confused, exhausted, caffeine-riddled brains eventually got the data moving again, and we hit the point of no return two hours later.

It was lauded as a complete success, but it is not an experience I would wish on anyone.

I speak with many Data Migration Pro readers who perform big-bang migrations regularly, so I know that many of these projects do succeed. We don’t have the statistics, but I would hazard a guess that big bang is the most popular method of data migration. It can, however, be a roller-coaster ride because of its “all-or-nothing” nature.

The concept of a big bang data migration is a simple one.

Build the data migration architecture, then move all of the data to the new target environment in a single process. The business grants the project team a window of opportunity to get it right, and you hope and pray that you never need the fallback plan you created. That window is key: you need a time box large enough to move the data to the target successfully, and this usually means downtime for the business.

Some of the benefits of a big bang approach are:

No need to run two systems simultaneously. By moving the users in one activity you can get on with the job of shutting down the legacy environment and recouping the many cost benefits this affords.

It’s tried and trusted. Service providers and in-house data migration specialists typically cut their teeth on this style of migration, so there is probably more of this kind of expertise available.

No synchronisation issues. With a big-bang migration we don’t need to keep the old environment up to date with record updates; the business has effectively moved on and is now driven entirely from the target platform.

There are drawbacks however:

Some businesses have no window of opportunity at all. Internet businesses, for example, are 24/7 operations that realistically cannot shut down for hours, let alone days, to transfer data; they need to stay online continuously.

A limited, fixed time-frame for migrating the data means that any overrun can have a major impact on the business.

Synchronisation may not be an issue, but fallback can be challenging, particularly if issues are found some time after the migration event: by then the legacy system has missed every update made on the target.
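To put numbers on the window and fallback risks, the sketch below checks whether a planned big-bang run finishes loading and validating early enough to still fall back before users come online. It is a minimal sketch under stated assumptions: `CutoverPlan` and its fields are hypothetical planning inputs, throughput would come from full-volume rehearsals, and the figures are illustrative rather than measured.

```python
from dataclasses import dataclass


@dataclass
class CutoverPlan:
    """Hypothetical planning inputs for a big-bang cutover (times in hours)."""
    window_hours: float      # downtime the business has granted
    rows: int                # total records to migrate
    rows_per_hour: float     # throughput observed in full-volume rehearsals
    validation_hours: float  # post-load checks before the go/no-go decision
    fallback_hours: float    # time needed to restore service on the legacy system


def point_of_no_return(plan: CutoverPlan) -> float:
    """Latest hour into the window at which falling back can still finish in time."""
    return plan.window_hours - plan.fallback_hours


def fits_window(plan: CutoverPlan) -> bool:
    """True if loading and validation both finish before the point of no return."""
    load_hours = plan.rows / plan.rows_per_hour
    return load_hours + plan.validation_hours <= point_of_no_return(plan)


# Illustrative numbers only: a 48-hour weekend window.
plan = CutoverPlan(window_hours=48, rows=20_000_000, rows_per_hour=1_000_000,
                   validation_hours=6, fallback_hours=12)
print(point_of_no_return(plan), fits_window(plan))  # 36 True
```

Halving throughput to 500,000 rows per hour makes the load alone take 40 hours, so `fits_window` returns `False`: exactly the kind of overrun that turns a tight window into a major business impact.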

Because of these risks, many organisations now adopt a lower-risk approach using one of the options below.

Approach #2: The ‘Incremental Cutover’ Data Migration Strategy

An incremental cutover approach reduces the risk of a big bang by migrating only discrete parts of the business, and their associated data, at a time.

Perhaps your business has discrete divisions split by geography or function. You may choose to move all customers in a particular country over a series of successive weekends, for example. If you are a telecoms or utilities firm, you may choose to move the equipment at particular physical sites.

Incremental cutovers de-risk the project because, if you hit problems, it is often far easier to roll back a small subset of data than an entire data store migrated in a big bang.
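A minimal sketch of why smaller waves are easier to undo: if every record loaded in a wave is logged against that wave, rolling back means removing only those records from the target. `WaveMigrator` and the in-memory dictionaries are hypothetical stand-ins for real source and target stores.

```python
class WaveMigrator:
    """Hypothetical incremental migrator that logs which keys each wave loaded."""

    def __init__(self, source: dict[str, dict]):
        self.source = source
        self.target: dict[str, dict] = {}
        self.wave_log: dict[str, list[str]] = {}

    def migrate_wave(self, wave_id: str, keys: list[str]) -> None:
        # Log each key as soon as it is loaded, so even a half-finished
        # wave can be undone on its own.
        loaded = self.wave_log.setdefault(wave_id, [])
        for key in keys:
            self.target[key] = dict(self.source[key])  # copy, don't share
            loaded.append(key)

    def rollback_wave(self, wave_id: str) -> None:
        # Remove only this wave's records; earlier waves stay live on the target.
        for key in self.wave_log.pop(wave_id, []):
            self.target.pop(key, None)
```

For example, after migrating a UK wave and then a French wave, rolling back the French wave leaves the UK customers untouched on the target.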

Sounds like a great concept?

It is, but it can also be challenging to execute effectively due to the complexity of the underlying applications, data and business processes.

For example, I may be a customer in region X, but I also hold additional services through your satellite offices in region Y.

How will you manage the business implications of migrating my account from the old environment to the new system?

How will the users respond to my query if I wish to place an order during the migration window?

How will the legacy and target applications know which system to source their data from?

The challenge is to manage these intricate relationships, both within the business processes and in the underlying data linkages.

To borrow a term from John Morris, this will require a considerable number of “data transitional rules”: temporary measures to ensure that the business and data can adequately serve the customer.
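One common transitional rule answers the questions above about which system currently owns an account and whether it can take an order right now. A minimal sketch, assuming a hypothetical routing table keyed by account ID that the migration updates as each account moves; readers and writers consult it instead of hard-coding a system.

```python
from enum import Enum


class System(Enum):
    LEGACY = "legacy"
    TARGET = "target"


class RoutingTable:
    """Hypothetical transitional rule: which system is the system of record per account."""

    def __init__(self) -> None:
        self._owner: dict[str, System] = {}
        self._in_flight: set[str] = set()  # accounts currently being migrated

    def begin_migration(self, account_id: str) -> None:
        self._in_flight.add(account_id)

    def complete_migration(self, account_id: str) -> None:
        self._in_flight.discard(account_id)
        self._owner[account_id] = System.TARGET

    def system_for(self, account_id: str) -> System:
        # Accounts not yet migrated default to the legacy system.
        return self._owner.get(account_id, System.LEGACY)

    def can_accept_order(self, account_id: str) -> bool:
        # While an account is mid-migration, new orders are held back.
        return account_id not in self._in_flight
```

Holding orders for in-flight accounts is only one possible policy; queuing them for replay on the target after the account moves is another.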

The key to an incremental migration is to thoroughly understand the business and data landscape to identify what ‘slices and dices’ can be used for your data migration strategy.
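As a sketch of this ‘slicing and dicing’, the function below groups customers into waves by region and flags any customer whose services span more than one region, like the region X / region Y customer above. The flagged accounts are the ones that need transitional rules or a deliberate decision about which wave they join. The input shape, a list of `(customer_id, region)` pairs, is an assumption for illustration.

```python
from collections import defaultdict


def plan_waves(services: list[tuple[str, str]]) -> tuple[dict[str, set[str]], set[str]]:
    """Group customers into regional waves and flag customers spanning regions.

    `services` is a hypothetical list of (customer_id, region) pairs,
    one per service a customer holds.
    """
    regions_by_customer: dict[str, set[str]] = defaultdict(set)
    for customer_id, region in services:
        regions_by_customer[customer_id].add(region)

    waves: dict[str, set[str]] = defaultdict(set)
    cross_region: set[str] = set()
    for customer_id, regions in regions_by_customer.items():
        if len(regions) == 1:
            waves[next(iter(regions))].add(customer_id)
        else:
            # Needs a transitional rule or a manual wave assignment.
            cross_region.add(customer_id)
    return dict(waves), cross_region
```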

Approach #3: The ‘Parallel Cutover’ Data Migration Strategy

Parallel running is a popular approach to data migration because it can greatly de-risk the outcome.

The concept is simple: we move the data to the target environment but ensure the data in each system remains current. This typically involves dual-keying or some other form of data synchronisation (see the Synchronisation Strategy section below).

For example, if we move the data in our customer call centre database to a new platform, we could get our users to enter the data in both the old and the new systems. The obvious downsides are cost and customer impact: if we rely only on our existing staff, the extra keying effort must result in a service hit somewhere in the business.
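Where dual-keying by staff is too costly, the same effect is sometimes automated with an application-level dual write. A minimal sketch, assuming the legacy system remains the system of record during parallel running: a write must succeed there, while a failure on the new target is queued for later repair rather than blocking the user. The writer functions and `repair_queue` are hypothetical.

```python
from typing import Callable

# Hypothetical writer signature: (key, record) -> None, raising on failure.
Writer = Callable[[str, dict], None]


def dual_write(key: str, record: dict, legacy_write: Writer,
               target_write: Writer, repair_queue: list) -> None:
    """Write to the legacy system of record first, then mirror to the target."""
    # A legacy failure propagates to the caller: the business write has failed.
    legacy_write(key, record)
    try:
        target_write(key, record)
    except Exception as exc:
        # The target is secondary during parallel running, so a failure here is
        # queued for later repair instead of failing the user's transaction.
        repair_queue.append((key, record, repr(exc)))
```

Making the legacy write authoritative keeps fallback trivial; the trade-off is that the target can lag until the repair queue is drained.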

A parallel running migration does not necessarily require data to be moved in one big-bang exercise; it can also support incremental migrations.

The benefit is that both systems run in parallel for a period of time, so the business can fully validate and sign off the platform, confident that it meets its needs.
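Sign-off during parallel running usually rests on reconciliation: comparing the same records in both systems and reporting the differences. The sketch below assumes both systems can be read as dictionaries keyed by a shared business key, which is a simplification; real reconciliation also has to map keys and normalise formats between systems.

```python
def reconcile(legacy: dict[str, dict], target: dict[str, dict]) -> dict[str, list]:
    """Compare two keyed datasets field by field and report discrepancies."""
    report: dict[str, list] = {
        "missing_in_target": sorted(legacy.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - legacy.keys()),
        "field_mismatches": [],
    }
    for key in sorted(legacy.keys() & target.keys()):
        old, new = legacy[key], target[key]
        # Check the union of fields so a field dropped on either side is caught.
        for field in sorted(old.keys() | new.keys()):
            if old.get(field) != new.get(field):
                report["field_mismatches"].append(
                    (key, field, old.get(field), new.get(field)))
    return report
```

An empty report on every run over the parallel period is the kind of evidence a business owner can sign off against.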

The downside is cost and technical complexity. Maintaining two environments is clearly far more expensive than maintaining one, and the integration capabilities needed to keep data coordinated across both can be considerable.

2: Transitional Storage Strategy

This technical term addresses how we will store the data while we move it from the old world to the new world. The method adopted can have different ramifications for the business.

There are two main options:

Temporary Storage Solution: This is commonly referred to as a ‘staging area’. It allows us to extract data from the legacy system(s) and perform the transformations and manipulations needed to get it into a fit state for loading into the target environment. While the data sits in this storage “tank”, it is effectively “in limbo”: it can’t be used in either the old world or the new world. This solution therefore typically requires business downtime, because in a busy, real-time business every minute the data spends in staging it drifts further out of date.
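A minimal sketch of the staging pattern: extract a snapshot into a staging ‘tank’ with an extraction timestamp, transform it there, then load it. The `staleness` helper makes the ‘drifting out of date’ point explicit, since any source change after `extracted_at` is missing from what gets loaded. `StagingArea`, the field names and the cleansing rule are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class StagingArea:
    """Hypothetical staging 'tank' holding extracted records before load."""
    extracted_at: Optional[datetime] = None
    rows: list[dict] = field(default_factory=list)

    def extract(self, source_rows: list[dict], now: datetime) -> None:
        self.extracted_at = now
        self.rows = [dict(r) for r in source_rows]  # point-in-time snapshot

    def transform(self) -> None:
        # Example cleansing rule: trim names and upper-case country codes.
        for r in self.rows:
            r["name"] = r["name"].strip()
            r["country"] = r["country"].upper()

    def load(self, target: dict[str, dict]) -> None:
        for r in self.rows:
            target[r["id"]] = r

    def staleness(self, now: datetime) -> timedelta:
        # How long the staged snapshot has been out of step with the live source.
        if self.extracted_at is None:
            raise ValueError("nothing extracted yet")
        return now - self.extracted_at
```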

Real-time Solution: This approach eliminates the need for a storage area by porting each item of data directly across in a single transaction. This has the benefit of zero downtime for the business, but it can be complex to deliver, because a state management facility is required to “remember” where each individual data item is now held.
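A minimal sketch of the real-time pattern: each item moves from source to target individually, and a location registry (the ‘state management facility’) records where each item now lives so every read goes to the right place. `LiveMigrator` is hypothetical; in a real system the copy and the registry update would need to be atomic, for example within one database transaction, which this in-memory sketch does not attempt.

```python
class LiveMigrator:
    """Hypothetical zero-downtime migrator that tracks where each item is held."""

    def __init__(self, legacy: dict[str, dict]):
        self.legacy = legacy
        self.target: dict[str, dict] = {}
        # The state management facility: key -> system currently holding it.
        self.location: dict[str, str] = {key: "legacy" for key in legacy}

    def move(self, key: str) -> None:
        # Copy the item, then flip its location. The legacy copy is kept
        # (e.g. for fallback) but is no longer treated as authoritative.
        self.target[key] = dict(self.legacy[key])
        self.location[key] = "target"

    def read(self, key: str) -> dict:
        # Callers never need to know which system currently holds the item.
        store = self.target if self.location[key] == "target" else self.legacy
        return store[key]
```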

3: Synchronisation Strategy

To support options such as the parallel approach described earlier, we need some form of synchronisation architecture to keep data aligned between the source and target environments.

Information must flow from the system where a change occurred to the system that is therefore out of date. This is also vital if we want to de-risk the project with a fallback solution that can be implemented hours, days or even weeks after the initial migration.

There are basically two types of synchronisation: Mono-directional and Bi-directional.

Mono-Directional: Changes flow in one direction only. For example, our target system may synchronise any new changes it receives back to the legacy system. This is useful because we may discover issues with the target environment that force us to redirect business services back to the old system at some point after cutover. Alternatively, we may migrate our data to the target environment in a big bang but keep the business pointing at the legacy system while we test the new architecture; mono-directional synchronisation then keeps the target refreshed with any changes that occur after the initial migration.
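A minimal sketch of mono-directional synchronisation, here from target back to legacy so that fallback remains possible after cutover. It assumes a hypothetical append-only change log on the target with increasing sequence numbers (`Change` and `sync_once` are illustrative, not a product API); a watermark records the last change applied, so each run replays only newer changes. Production systems more often read changes from the database’s own transaction log via change data capture (CDC).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Change:
    seq: int                # position in the source system's change log
    key: str
    record: Optional[dict]  # None means the record was deleted


def sync_once(change_log: list[Change], replica: dict[str, dict], watermark: int) -> int:
    """Apply changes newer than `watermark` to the replica; return the new watermark."""
    for change in sorted(change_log, key=lambda c: c.seq):
        if change.seq <= watermark:
            continue  # already applied on a previous run
        if change.record is None:
            replica.pop(change.key, None)
        else:
            replica[change.key] = dict(change.record)
        watermark = change.seq
    return watermark
```

Pointing the same function at a change log captured on the legacy system covers the other scenario: keeping a not-yet-live target refreshed while the business still runs on legacy.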

Bi-Directional: In this approach, changes in the old system flow to the new target system, and changes in the target system flow back to the old system. We would typically implement this if we were performing an incremental migration with parallel running. For example, if my customer record in the new system receives updates, we may need to post these changes back to the old system, which still performs some legacy services on my account. This gives maximum flexibility but requires a more complex architecture.
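Part of that extra complexity is that the same record can change in both systems between sync runs. A minimal sketch of one common policy, assuming each system can report a hypothetical set of keys changed since the last run: one-sided changes are copied across, and records changed on both sides are reported as conflicts for manual resolution rather than silently overwritten. Deletions are not handled here, and other policies such as last-writer-wins are equally possible.

```python
def sync_bidirectional(legacy: dict[str, dict], target: dict[str, dict],
                       dirty_legacy: set[str], dirty_target: set[str]) -> list[str]:
    """Propagate one-sided changes both ways; return keys changed on both sides."""
    conflicts = sorted(dirty_legacy & dirty_target)
    for key in dirty_legacy - dirty_target:
        target[key] = dict(legacy[key])  # legacy-only change flows to target
    for key in dirty_target - dirty_legacy:
        legacy[key] = dict(target[key])  # target-only change flows to legacy
    # Clear flags for everything propagated; conflicts stay dirty until resolved.
    dirty_legacy.intersection_update(conflicts)
    dirty_target.intersection_update(conflicts)
    return conflicts
```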

4: Important Considerations

The data migration go-live strategy you adopt must be driven primarily by your business needs.

Different needs require different approaches, and it really pays to understand the options so you can make the right decisions at project inception.

For example, if you run a 24/7 internet business that simply cannot afford downtime, you need to address how you will manage the transitional storage strategy and the synchronisation strategy. If your data will sit in a staging area for two days and you have no means of updating the target system with changes made during that period, the migration will effectively have failed.

If you are performing an FDA-regulated migration, you may need exhaustive post-migration testing and audit trails to ensure that every single data item, attribute and relationship has been correctly migrated. This kind of requirement may necessitate a period of parallel running, so that you have sufficient time to test adequately and fall back if required.
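Record counts alone cannot show that relationships survived. A minimal sketch of one relationship check, assuming hypothetical ID mapping tables recorded during the migration (legacy ID to target ID, one for orders and one for customers): every legacy order-to-customer link is translated through the mappings and must exist unchanged in the target. Anything returned is a broken relationship to investigate and record in the audit trail.

```python
def broken_links(legacy_orders: dict[str, str], target_orders: dict[str, str],
                 order_map: dict[str, str], customer_map: dict[str, str]) -> list[str]:
    """Return legacy order IDs whose order->customer link was not preserved.

    legacy_orders / target_orders map order ID -> customer ID in each system;
    order_map / customer_map translate legacy IDs to target IDs.
    """
    broken = []
    for order_id, customer_id in sorted(legacy_orders.items()):
        new_order = order_map.get(order_id)
        new_customer = customer_map.get(customer_id)
        if new_order is None or new_customer is None:
            broken.append(order_id)  # order or its customer was never migrated
        elif target_orders.get(new_order) != new_customer:
            broken.append(order_id)  # migrated, but linked to the wrong customer
    return broken
```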

Perhaps you want to migrate discrete sets of customers in particular regions over a six-month period using an incremental approach. The interrelationships between the business and data models may necessitate a bi-directional synchronisation strategy, so that updates to customers in the target system flow to the source system and vice versa.

If you are a technician, all of this matters because you have to build a solution that delivers both the business needs and a risk management approach that ensures service continuity.

If you are on the business side, it matters because many data migration projects fail to deliver on their objectives.

Data migration is inherently risky so you need to be confident that your technical peers are delivering a solution that fits your current and future business needs.

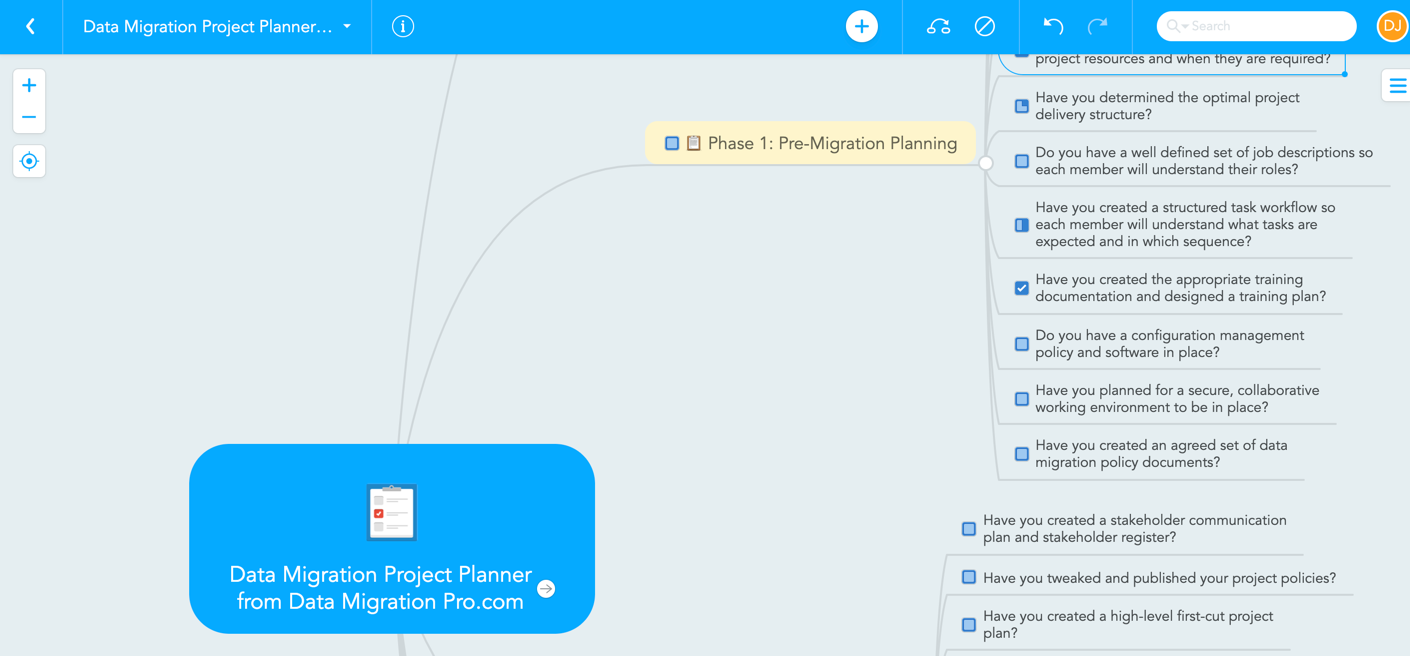


About the Author

Dylan Jones

Co-Founder/Contributor - Data Migration Pro

Principal/Coach - myDataBrand

Dylan is the former editor and co-founder of Data Migration Pro. A former Data Migration Consultant, he has more than 20 years’ experience helping organisations deliver complex data migration, data quality and other data-driven initiatives.

He is now the founder and principal at myDataBrand, a specialist coaching, training and advisory firm that helps specialist data management consultancies and software vendors attract more clients.